Optical Data Center Interconnect (DCI) Solutions

Optical data center interconnect infrastructure consists mainly of switches and servers, connected either by fiber optic cables with optical transceivers or by active optical cables (AOCs) and direct attach cables (DACs). Explosive traffic growth in data centers is pushing optical transceiver data rates upward at an accelerating pace: the move from 10G to 40G took 5 years, the move from 40G to 100G took 4 years, and the move from 100G to 400G may take only 3 years. In future data centers, all outbound data will first pass through large-scale internal processing, and both internal and outbound traffic from AI, VR/AR, UHD video, and similar applications is rising. East-west traffic within the data center is surging, and the flat data center architecture keeps the 100G optical transceiver market growing at high speed.
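The transition times quoted above can be turned into implied compound annual growth rates for comparison. The following is a minimal Python sketch; the names `TRANSITIONS` and `annual_growth` are illustrative assumptions, and the 100G to 400G entry is the article's projection rather than a measured figure.

```python
# Rate transitions quoted in the text: (from_gbps, to_gbps, years).
# The last entry (100G -> 400G in 3 years) is a projection, not a measurement.
TRANSITIONS = [
    (10, 40, 5),
    (40, 100, 4),
    (100, 400, 3),
]


def annual_growth(from_gbps: float, to_gbps: float, years: float) -> float:
    """Return the compound annual growth rate implied by one transition."""
    if from_gbps <= 0 or to_gbps <= 0 or years <= 0:
        raise ValueError("rates and years must be positive")
    return (to_gbps / from_gbps) ** (1 / years) - 1


if __name__ == "__main__":
    for from_gbps, to_gbps, years in TRANSITIONS:
        rate = annual_growth(from_gbps, to_gbps, years)
        print(f"{from_gbps}G -> {to_gbps}G in {years} years: "
              f"about {rate:.0%} per year")
```

Under these figures, the 40G to 100G step is a 2.5x increase while the other two are 4x, so a shorter cycle does not by itself imply a higher annual growth rate for every step.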

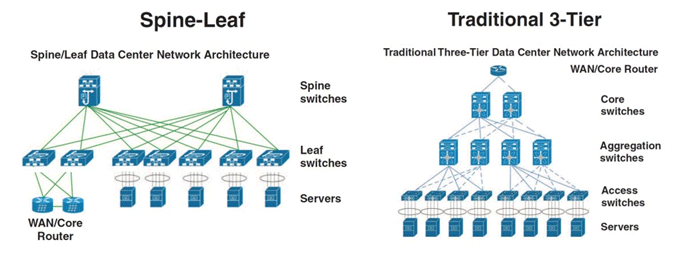

In a traditional 3-tier data center, the main data flow runs vertically between the tiers, that is, north-south. In a flat spine-leaf data center, the main data flow runs east-west.

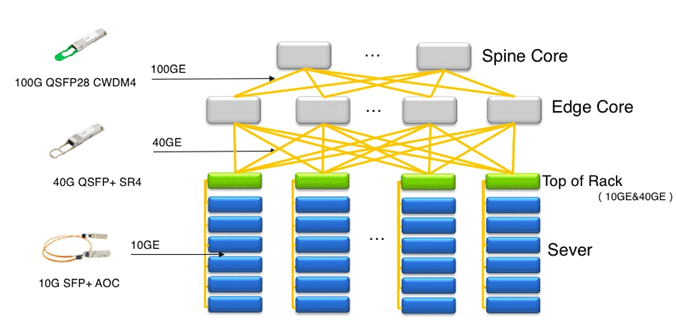

The following application case shows how optical transceivers and AOCs are used in a data center optical interconnect. The network architecture of a cloud data center is divided into Spine Core, Edge Core, and ToR (Top of Rack) layers. 10G SFP+ AOCs connect the ToR access switches to the server NICs. 40G QSFP+ SR4 optical transceivers with MTP/MPO cables connect the ToR access switches to the Edge Core switches. 100G QSFP28 CWDM4 optical transceivers with duplex LC cables connect the Edge Core switches to the Spine Core switches.
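The application case above can be captured as a small lookup table, which makes it easy to check which module and cabling belong to a given pair of tiers. This is a sketch only: the `Link` class, `LINK_PLAN`, and `find_link` are hypothetical names introduced for illustration, and the values are copied from the case described above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Link:
    lower_tier: str
    upper_tier: str
    rate_gbps: int
    module: str
    cabling: str


# One entry per tier boundary in the application case.
LINK_PLAN = [
    Link("Server NIC", "ToR", 10, "10G SFP+ AOC", "integrated in the AOC"),
    Link("ToR", "Edge Core", 40, "40G QSFP+ SR4", "MTP/MPO"),
    Link("Edge Core", "Spine Core", 100, "100G QSFP28 CWDM4", "duplex LC"),
]


def find_link(tier_a: str, tier_b: str) -> Optional[Link]:
    """Return the link joining two adjacent tiers (in either order), or None."""
    for link in LINK_PLAN:
        if {link.lower_tier, link.upper_tier} == {tier_a, tier_b}:
            return link
    return None


if __name__ == "__main__":
    link = find_link("Edge Core", "ToR")
    print(link.module, "over", link.cabling)
```

Tiers that are not directly connected in this case, such as ToR and Spine Core, return `None`.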

| Upgrade Path | 2008–2014 | 2013–2019 | 2017–2021 | 2019~ |
| --- | --- | --- | --- | --- |
| Data Center Campus | 40G-LR4 | 40G-LR4, 100G-CWDM4 | 100G-CWDM4 | 200G-FR4 |
| Intra-Building | 40G-eSR4, 4x10G-SR | 40G-eSR4 | 100G-SR4 | 200G-DR4 |
| Intra-Rack | CAT6 | 10G AOC | 25G AOC | 100G AOC |
| Server Data Rate | 1G | 10G | 25G | 100G |
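To see how large each generational step is, the server data rate row of the table can be reduced to step-by-step multipliers. The sketch below is illustrative; `SERVER_RATE_GBPS` and `step_multipliers` are assumed names, and the period labels are written with plain hyphens.

```python
# Server data rate per upgrade period, in Gbps, taken from the table above.
SERVER_RATE_GBPS = {
    "2008-2014": 1,
    "2013-2019": 10,
    "2017-2021": 25,
    "2019~": 100,
}


def step_multipliers(rates: dict) -> list:
    """Return the ratio between each period's rate and the previous one."""
    values = list(rates.values())  # dicts preserve insertion order
    return [later / earlier for earlier, later in zip(values, values[1:])]


if __name__ == "__main__":
    periods = list(SERVER_RATE_GBPS)
    for period, factor in zip(periods[1:], step_multipliers(SERVER_RATE_GBPS)):
        print(f"{period}: {factor:g}x the previous generation")
```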

Compared with telecommunication networks, cloud data centers differ in traffic growth rate, network architecture, reliability requirements, and equipment room environment. As a result, their demand for optical transceivers has the following characteristics: shorter iteration period, higher speed, higher density, lower power consumption, and mass deployment.

Shorter iteration period

Rapid growth in data center traffic is accelerating optical transceiver upgrades. The iteration period of data center hardware, including optical transceivers, is about 3 years, whereas the iteration period of telecommunication optical transceivers is usually 6 to 7 years or longer.

Higher speed

Because data center traffic is growing explosively, optical transceiver technology cannot iterate fast enough to keep up with demand, and almost all of the most advanced technologies are applied in data centers. Data centers always have demand for higher-speed optical transceivers; the key question is whether the technology is mature.

Higher density

The point of higher density is to increase the transmission capacity of switches and server boards, which in essence serves the demand of rapidly growing traffic. In addition, the higher the density, the fewer switches need to be deployed, which saves equipment room resources.
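The relationship between per-port rate and switch count can be illustrated with simple arithmetic. The sketch below is hypothetical: the 12.8 Tbps aggregate capacity and the 32-port switch are assumed numbers chosen for the example, not figures from the article, and the model ignores uplinks, redundancy, and oversubscription.

```python
import math


def switches_needed(total_gbps: float, ports_per_switch: int,
                    port_rate_gbps: float) -> int:
    """Return the minimum number of switches that provide total_gbps."""
    if total_gbps <= 0 or ports_per_switch <= 0 or port_rate_gbps <= 0:
        raise ValueError("all arguments must be positive")
    capacity_per_switch = ports_per_switch * port_rate_gbps
    return math.ceil(total_gbps / capacity_per_switch)


if __name__ == "__main__":
    TOTAL_GBPS = 12_800  # hypothetical aggregate capacity (12.8 Tbps)
    PORTS = 32           # hypothetical ports per switch
    for rate in (40, 100):
        count = switches_needed(TOTAL_GBPS, PORTS, rate)
        print(f"{PORTS} x {rate}G ports: {count} switches")
```

With these assumed numbers, moving the same 32 ports from 40G to 100G reduces the count from 10 switches to 4.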

Lower power consumption

Data centers consume a great deal of power. Lower power consumption saves energy and eases heat dissipation. Because the backboards of data center equipment are packed with optical transceivers, the performance and density of the optical transceivers will suffer if heat dissipation is not handled properly.